by Tina on Jan. 4th, 2011

Whether you’re a nerd, a geek, a programmer, or just a regular person interested in technology, you should enjoy some serious humor; otherwise this world is a very sad place.

With this article you can also do something for your abs and burn off the excess Christmas treats. Start the New Year with a broad grin and lots of laughter. It’s healthy and contagious. Infect yourself with 50 hilarious geeky one-line jokes.

Logical

•There are only 10 types of people in the world: those that understand binary and those that don’t.

•Computers make very fast, very accurate mistakes.

•Be nice to the nerds, for all you know they might be the next Bill Gates!

•Artificial intelligence usually beats real stupidity.

•To err is human – and to blame it on a computer is even more so.

•CAPS LOCK – Preventing Login Since 1980.

Browsing

•The truth is out there. Anybody got the URL?

•The Internet: where men are men, women are men, and children are FBI agents.

•Some things Man was never meant to know. For everything else, there’s Google.

Operating Systems

•The box said ‘Requires Windows Vista or better’. So I installed LINUX.

•UNIX is basically a simple operating system, but you have to be a genius to understand the simplicity.

•In a world without fences and walls, who needs Gates and Windows?

•C://dos

C://dos.run

run.dos.run

•Bugs come in through open Windows.

•Penguins love cold, they won’t survive the sun.

•Unix is user friendly. It’s just selective about who its friends are.

•Failure is not an option. It comes bundled with your Microsoft product.

•NT is the only OS that has caused me to beat a piece of hardware to death with my bare hands.

•My daily Unix command list: unzip; strip; touch; finger; mount; fsck; more; yes; unmount; sleep.

•Microsoft: “You’ve got questions. We’ve got dancing paperclips.”

•Erik Naggum: “Microsoft is not the answer. Microsoft is the question. NO is the answer.”

•Windows isn’t a virus, viruses do something.

•Computers are like air conditioners: they stop working when you open Windows.

•Mac users swear by their Mac, PC users swear at their PC.

Programming

•If at first you don’t succeed; call it version 1.0.

•My software never has bugs. It just develops random features.

•I would love to change the world, but they won’t give me the source code.

•The code that is the hardest to debug is the code that you know cannot possibly be wrong.

•Beware of programmers that carry screwdrivers.

•Programming today is a race between software engineers striving to build bigger and better idiot-proof programs, and the Universe trying to produce bigger and better idiots. So far, the Universe is winning.

•The beginning of the programmer’s wisdom is understanding the difference between getting a program to run and having a runnable program.

•I’m not anti-social; I’m just not user friendly.

•Hey! It compiles! Ship it!

•If Ruby is not and Perl is the answer, you don’t understand the question.

•The more I C, the less I see.

•COBOL programmers understand why women hate periods.

•Michael Sinz: “Programming is like sex, one mistake and you have to support it for the rest of your life.”

•If you give someone a program, you will frustrate them for a day; if you teach them how to program, you will frustrate them for a lifetime.

•Programmers are tools for converting caffeine into code.

•My attitude isn’t bad. It’s in beta.

Ad Absurdum

•Enter any 11-digit prime number to continue.

•E-mail returned to sender, insufficient voltage.

•All wiyht. Rho sritched mg kegtops awound?

•Black holes are where God divided by zero.

•If I wanted a warm fuzzy feeling, I’d antialias my graphics!

•If brute force doesn’t solve your problems, then you aren’t using enough.

•SUPERCOMPUTER: what it sounded like before you bought it.

•Evolution is God’s way of issuing upgrades.

•Linus Torvalds: “Real men don’t use backups, they post their stuff on a public ftp server and let the rest of the world make copies.”

•Hacking is like sex. You get in, you get out, and hope that you didn’t leave something that can be traced back to you.

Calculations

•There are three kinds of people: those who can count and those who can’t.

•Latest survey shows that 3 out of 4 people make up 75% of the world’s population.

•Hand over the calculator, friends don’t let friends derive drunk.

•An infinite crowd of mathematicians enters a bar. The first one orders a pint, the second one a half pint, the third one a quarter pint… “I understand”, says the bartender – and pours two pints.

•1f u c4n r34d th1s u r34lly n33d t0 g37 l41d.

Does your belly hurt, yet? MakeUseOf has more funny resources:

• The 5 Best Joke Of The Day Sites Ever by Saikat

• 5 Websites To Get Hilarious Practical Joke Ideas by Angela

• A Brief Overview of Internet Memes & How You Can Quickly Create Your Own by Tim

• Four Funny Ways To Prank Your Parents With The Family Computer by Justin

• 6 Scary Emails To Send To Friends As A Practical Joke by Tina

• 8 Best Daily Jokes Sites To Lighten Up Your Mood by Tina

• 5 Websites With Things To Make You Smile & Light Up Your Day by Tina

What is your favorite geek one-line joke?

8/1/11

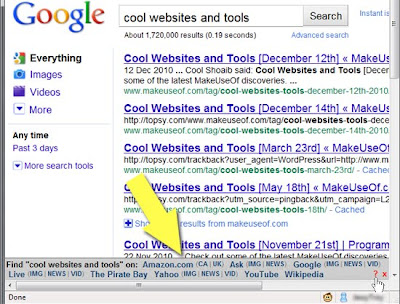

Greasemonkey Scripts

3 Greasemonkey Scripts To Jump From Google Results To Other Search Engines

by Ann Smarty on Jan. 3rd, 2011

Google is an awesome search engine and more often than not we turn to it when we need to find information on something. However, Google is not the only search engine we need and use.

Very often we need more specific and targeted resources: we turn to Flickr when we need to search for creative photos, we turn to YouTube for user-created videos, and we turn to reference sources to find some quick, brief information on an event or word. None of these niche search engines will ever be replaced by the mighty Google.

Even Google knows that. Do you remember that when Google first launched, it had those “quick search” links taking you to the current search results on other search engines? This post is about bringing this old and forgotten Google feature back using Greasemonkey scripts.

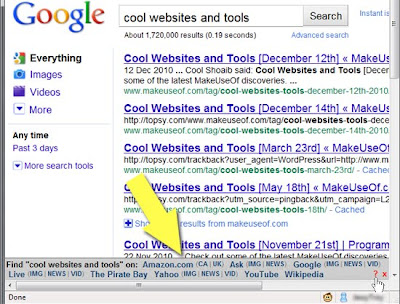

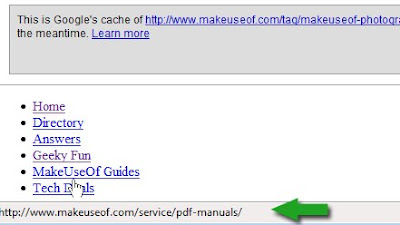

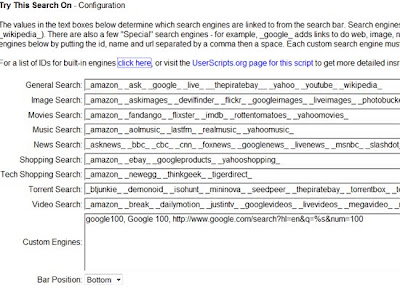

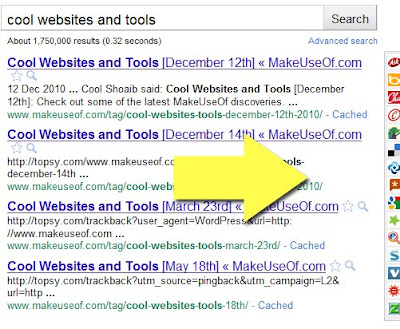

1. Try This Search On (Download the script here)

Where are the links displayed? Links to the available alternative search engines are displayed in the bar which you can choose to display at the bottom or the top of the page.

This script creates links to search results provided by the following search engines (by default):

• Amazon (US, Canada and United Kingdom);

• Ask (general, image search and news search);

• Google (general, image search, video search and news search);

• Bing (general, image search, video search and news search);

• Yahoo (general, image search, video search and news search);

• YouTube;

• Wikipedia

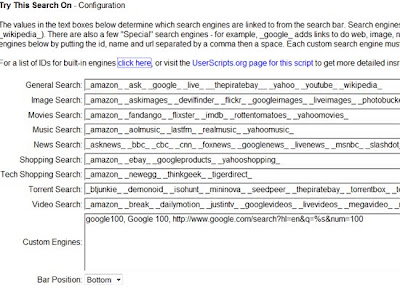

You can easily modify the list of search engines by going to the script options (click “?” in the script panel – see the screenshot above) and viewing the list of available search engine IDs:

From the options page you can also set the bar position. You can easily hide the panel by clicking the x sign.
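To make the idea of a configurable list of search engine IDs more concrete, here is a minimal sketch of how such a script might turn the current query into links. The engine table, the IDs and the `buildSearchLinks` helper are illustrative assumptions, not the actual code or IDs used by Try This Search On.

```javascript
// Hypothetical engine table: IDs and URL templates are assumptions for
// illustration, not the ones shipped with the script.
const ENGINES = [
  { id: "bing", name: "Bing", template: "https://www.bing.com/search?q={q}" },
  { id: "youtube", name: "YouTube", template: "https://www.youtube.com/results?search_query={q}" },
  { id: "wikipedia", name: "Wikipedia", template: "https://en.wikipedia.org/w/index.php?search={q}" },
];

// Build one link per enabled engine for the given query.
// The result follows the order of ENGINES, not the order of enabledIds.
function buildSearchLinks(query, enabledIds) {
  const encoded = encodeURIComponent(query);
  return ENGINES
    .filter((engine) => enabledIds.includes(engine.id))
    .map((engine) => ({
      name: engine.name,
      url: engine.template.replace("{q}", encoded),
    }));
}

const links = buildSearchLinks("grease monkey", ["bing", "wikipedia"]);
console.log(links);
```

Editing the list of enabled IDs is then all it takes to change which links appear in the bar.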

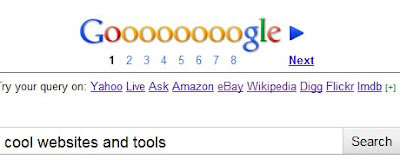

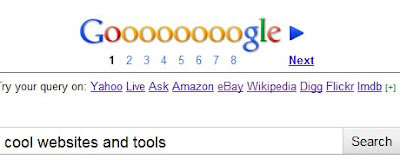

2. Retrolinks (Download the script here)

Where are the links displayed? Links to the available alternative search engines are displayed below the search results right under Google’s paging.

This script, written by a Google employee to revive an old Google search feature, displays links to the following search engines by default:

• Yahoo

• Bing

• Ask

• Amazon

• eBay

• Wikipedia

• Digg (doesn’t seem to work)

• Flickr

• IMDb

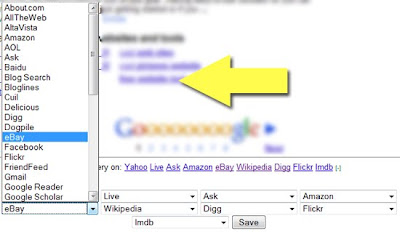

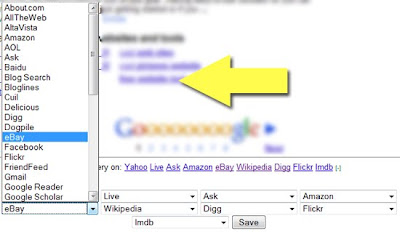

If you click the + sign after the list, you’ll be able to choose the order in which the search engines are displayed. You’ll also be able to choose from a huge variety of databases to try your search in (there are 42 different search sites to choose from):

Note: the tool is quite old and I am not sure if it was ever updated, so you may find that some of the search engines don’t work. I for one couldn’t get Digg search to work there. However, you should be able to modify the script’s source code to get the one that really matters to you working properly.
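If you do go into the source, the fix would likely involve two pieces: reading the search terms from the Google results URL and substituting them into the target site’s current search URL. The sketch below shows that idea only. The function names and the `example.com` template are hypothetical and are not taken from Retrolinks.

```javascript
// Read the search terms from a Google results URL (the "q" parameter).
function getQuery(resultsUrl) {
  const params = new URL(resultsUrl).searchParams;
  return params.get("q") || "";
}

// Substitute the query into a search URL template. The "%s" placeholder
// convention is an assumption made for this sketch.
function retargetSearch(resultsUrl, template) {
  return template.replace("%s", encodeURIComponent(getQuery(resultsUrl)));
}

const googleUrl = "https://www.google.com/search?q=user+scripts&hl=en";
console.log(getQuery(googleUrl));
console.log(retargetSearch(googleUrl, "https://example.com/search?q=%s"));
```

When a site changes its search address, only the template string needs updating.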

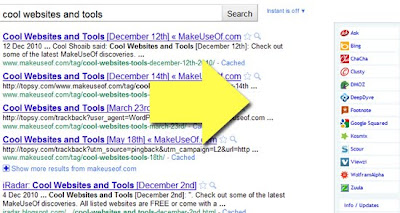

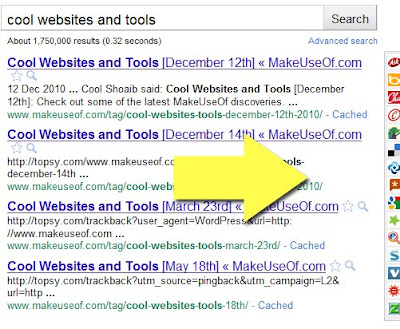

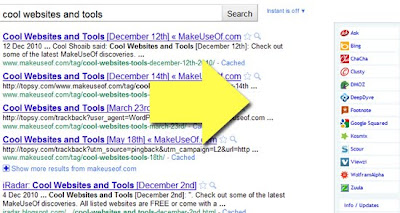

3. SearchJump (Download the script here)

Where are the links displayed? Links to the available alternative search engines are displayed in the sidebar to the right of search results.

Alternative search engines include:

• Ask;

• Bing;

• Dmoz;

• DeepDyve;

• WolframAlpha;

• Scour

I wasn’t able to find a way to edit the list of available search engines.

If you like this one, you may want to check out its minimal version – with it, only favicons of search sites are displayed.

Do you find the option to try your searches on different search engines useful? Please share your thoughts!

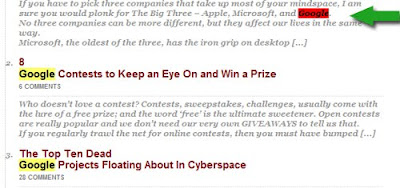

4 Great Greasemonkey Scripts To Easily Browse & Scan Through Google Cache

by Ann Smarty on Jan. 4th, 2011

Google cache is an awesome tool that can prove really handy for various purposes. For example, clicking through Google cache links from within search results pages will highlight your search terms and let you locate them more easily on the target page.

Besides, Google cache can also be used as a proxy server to bypass office and school firewalls or to access sites that are blocked in your area, restricted to registered users (but visible to search engines), etc. Using Google cache will also allow you to browse your competitors’ sites without leaving any trace of you being there (for that, you will need to use Google’s “Text only” version of the page).

I for one use Google cache on a daily basis and have collected a few useful tools to make the most of it. But before we take a closer look at those tools, let me note the following:

• Not all webpages are available in Google cache. Savvy website owners may choose not to allow Google to archive their webpages by using the NOARCHIVE Robots meta tag;

• All of the tools below are userscripts. I’ve tested them all in Firefox with Greasemonkey installed. If you use Opera, Google Chrome, Safari or IE, you should still be able to use the scripts, but I didn’t try them there.

So here you go: how to make the most of Google cache browsing with the following userscripts:

Browse Google Cache

If you start using Google cache, the first thing you will notice is that it won’t let you stay for long: whenever you click any link, it takes you out of the cached page to the actual website. If you plan to use Google cache often, you may want an option to stay within it while browsing the whole website.

(Note: as I have noted above, some pages may be absent in Google cache which means that sometimes you’ll end up landing on Google’s error messages).
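One reason a page can be missing from the cache is the NOARCHIVE robots meta tag. As a rough illustration, the sketch below checks a page’s HTML for such a tag. The `hasNoArchive` helper is hypothetical and its regular expressions are a simple heuristic, not a full HTML parser. Inside a userscript you would more likely query the DOM directly.

```javascript
// Return true if the HTML contains a robots (or googlebot) meta tag
// whose content attribute includes "noarchive". Heuristic only.
function hasNoArchive(html) {
  const metaTags = html.match(/<meta\b[^>]*>/gi) || [];
  return metaTags.some((tag) => {
    const isRobotsTag = /\bname\s*=\s*["']?\s*(robots|googlebot)\b/i.test(tag);
    const saysNoArchive = /\bcontent\s*=\s*["'][^"']*\bnoarchive\b/i.test(tag);
    return isRobotsTag && saysNoArchive;
  });
}

const blocked = '<head><meta name="robots" content="noindex, NOARCHIVE"></head>';
const open = '<head><meta name="description" content="noarchive tips"></head>';
console.log(hasNoArchive(blocked), hasNoArchive(open));
```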

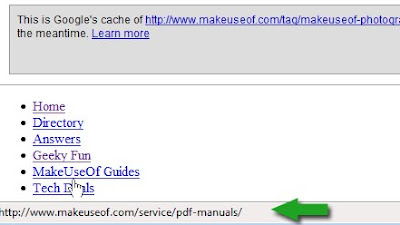

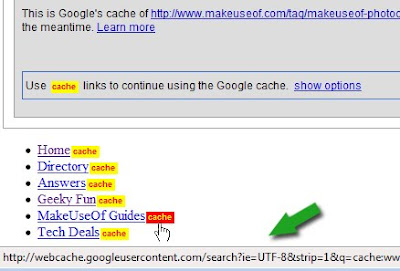

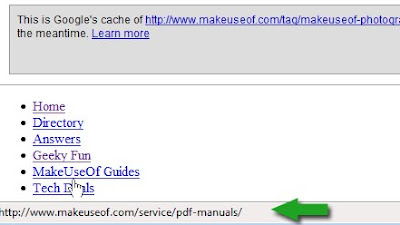

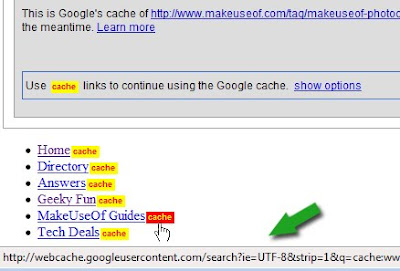

1. Google Cache Continue Redux

This script adds yellow “cache” links next to each page link within Google’s snapshot of the page. Clicking the yellow cache link opens the Google cache version of the page, allowing the user to continue browsing the site through Google’s cache.

Before:

After:

Does it keep the initial search terms highlighted? – Yes!
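The core of a script like this is turning an ordinary link into its cached counterpart while carrying the search terms along so they stay highlighted. Here is a minimal sketch of that step. It assumes the `webcache.googleusercontent.com/search?q=cache:` URL form, and the `toCacheUrl` and `addCacheLinks` helpers are hypothetical names, not functions from Google Cache Continue Redux.

```javascript
// Assumed cache endpoint; treat it as a configurable value.
const CACHE_ENDPOINT = "http://webcache.googleusercontent.com/search";

// Build a cache URL for a page, appending the search terms so they
// can be highlighted again on the next cached page.
function toCacheUrl(pageUrl, searchTerms) {
  const query = ["cache:" + pageUrl].concat(searchTerms).join(" ");
  return CACHE_ENDPOINT + "?q=" + encodeURIComponent(query);
}

// Pair each http(s) link with its cache version; other schemes
// (mailto:, javascript:, ...) are skipped.
function addCacheLinks(hrefs, searchTerms) {
  return hrefs
    .filter((href) => /^https?:\/\//.test(href))
    .map((href) => ({ original: href, cache: toCacheUrl(href, searchTerms) }));
}

const pairs = addCacheLinks(
  ["http://example.com/about", "mailto:someone@example.com"],
  ["greasemonkey"]
);
console.log(pairs);
```

Keeping both the original and the cache URL mirrors the behaviour described above, where the yellow “cache” link sits next to the normal link rather than replacing it.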

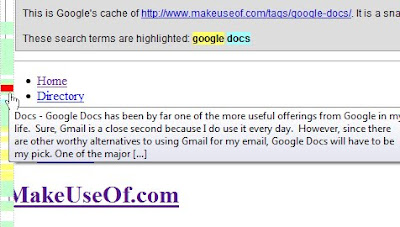

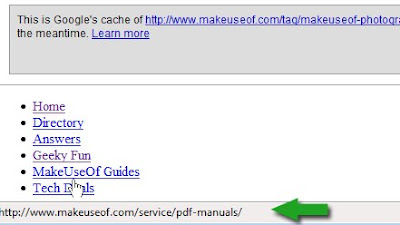

2. Google Cache Browser

This script (or this identical one) replaces the links on a cached page with Google cache links. This way you can keep browsing the website from within Google cache, just as with Google Cache Continue Redux, but with no special formatting for the cache links. It also gives you fewer options: you can’t easily leave Google cache by clicking the actual link.

Before:

After:

Does it keep the initial search terms highlighted? – No

Easily Scan Through The Page Cache

1. Google Cache Highlights Browser

This script makes Google cache keyboard-friendly. It creates two hotkeys (“n” for “next” and “b” for “previous”) that will automatically scroll through highlighted search words on a Google cache page:
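The navigation logic behind such hotkeys can be quite small. The following sketch shows one way to pick the next highlight to scroll to. It assumes the list wraps around at both ends, which the original script may or may not do, and `nextHighlightIndex` is a hypothetical helper.

```javascript
// Given the index of the current highlight (-1 if none yet), the number of
// highlights on the page and the key pressed, return the index to jump to.
function nextHighlightIndex(current, total, key) {
  if (total === 0) return -1;                      // nothing to scroll to
  if (key !== "n" && key !== "b") return current;  // ignore other keys
  if (current < 0) return key === "n" ? 0 : total - 1;
  const step = key === "n" ? 1 : -1;
  return (current + step + total) % total;         // wrap around (assumption)
}

console.log(nextHighlightIndex(-1, 3, "n"));
console.log(nextHighlightIndex(0, 3, "b"));
```

In a real userscript the returned index would be used to call something like `scrollIntoView()` on the matching highlighted element from a `keydown` handler.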

2. Google Cache Mapper (Text-Only Version)

This script adds a virtual scrollbar to the left of the screen that provides a quick way to find highlighted terms on a Google cached page. Google highlights your search terms using different colors and this script visualizes the matches.

You can hover over any part of the scrollbar to see the search term and its immediate context. You can then click through the different colors in the scrollbar to go to any search term occurrence:
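The mapping from page to scrollbar is simple proportional arithmetic: a highlight that sits halfway down the page gets a marker halfway down the bar. A minimal sketch of that calculation follows. The `mapHighlightsToBar` helper and the shape of its input objects are assumptions, not Google Cache Mapper’s own code.

```javascript
// Convert each highlight's vertical offset on the page into a marker
// position on a scrollbar of the given height (both in pixels).
function mapHighlightsToBar(highlights, pageHeight, barHeight) {
  return highlights.map((highlight) => ({
    term: highlight.term,
    top: Math.round((highlight.offsetTop / pageHeight) * barHeight),
  }));
}

const markers = mapHighlightsToBar(
  [
    { term: "cache", offsetTop: 500 },
    { term: "google", offsetTop: 1500 },
  ],
  2000, // total page height
  400   // scrollbar height
);
console.log(markers);
```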

Do you use Google cache a lot? Please share your tips and tricks!


Better Than Batch: A Windows Scripting Host Tutorial

by Ryan Dube on Dec. 16th, 2010

If you’ve been working in the computer world for a while then you’re probably pretty familiar with batch jobs. IT professionals around the world utilized them to run all sorts of automated computer processing jobs and personal tasks. In fact Paul recently covered how to write such a file.

The problem with batch jobs is that they were very limited. The command set was fairly small and didn’t offer much when it came to structured logic such as if-then statements and for-next or while loops.

Later, Windows Scripting Host came along. It is a multi-language scripting utility that Microsoft started installing as standard on all PCs from Windows 98 onward. With the second generation of the tool, it was renamed Windows Script Host (WSH).

A Windows Script Host Tutorial

Here at MUO, we love computer automation. For example, Varun covered Sikuli (a tool to write automation scripts) and Guy showed you how to use AutoIt to automate tasks. The cool thing about WSH is that if you have any post-Win 98 PC, you can write a “batch” script in a variety of languages.

Available languages include JScript, VBA, and VBScript. It’s also possible to write scripts in Perl, Python, PHP, Ruby or even Basic if you have the right implementation with the right scripting engine.

Personally, I know Visual Basic well, so I usually opt for VBScript. The beauty here is that you don’t need any special programming software or compiler. Just open up Notepad and write your script, just like how you wrote your batch jobs.

Without installing anything, you can write scripts in VBScript. The simplest script prints text to a pop-up window, like this:

Save the file as a .vbs and Windows will recognize and run it. This is what happens when you double click on the file above:
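As a rough stand-in for a first script, here is a JScript-flavoured sketch of the same pop-up idea. It is not the VBScript from the article, so it would be saved with a .js extension rather than .vbs. The `showMessage` helper is hypothetical. `WScript.Echo` is the Windows Script Host call, and the `console.log` branch is only there so the sketch can also be tried outside Windows.

```javascript
// Show a message: a pop-up (wscript.exe) or console line (cscript.exe)
// under Windows Script Host, plain console output anywhere else.
function showMessage(text) {
  if (typeof WScript !== "undefined") {
    WScript.Echo(text);
  } else {
    console.log(text); // fallback for non-WSH environments such as Node.js
  }
  return text;
}

var shown = showMessage("Hello from a script!");
```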

You can write more advanced scripts utilizing the languages you’re accustomed to. For the most flexibility, wrap the file in <job> tags and each segment of code in <script language="VBScript"> tags (or whatever language you choose), and save it as a .wsf file. This way, so long as you enclose the code in the defined script language tags, you can use multiple languages in the same file.

To show you how cool this can be, I decided to write a script that reaches out to the NIST atomic clock to check the current time. If it’s morning, it automatically opens my Thunderbird email client. If it’s around noon, it opens my browser to CNN.com. This conditional script gives you the ability to make your computer much more intelligent. If you run this script when your PC starts up, you can make it automatically launch whatever you like depending on what time of day it is.

The first part of the script goes out to the time server “http://time.nist.gov:13” and gets the current time. After formatting it correctly, it sets the computer time. Credit where credit is due, this script was adapted from TomRiddle’s excellent script over at VisualBasicScript.com. To save time, always find the example code you need online, and then tweak it to your needs.
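The formatting step can be sketched as follows. This is not TomRiddle’s script: it is a small JavaScript illustration that assumes the server replies in the NIST daytime layout (“JJJJJ YY-MM-DD HH:MM:SS …” in UTC) and that the two-digit year belongs to the 2000s. The `parseDaytime` helper and the sample reply are made up for the example, and actually setting the system clock is left out.

```javascript
// Parse a daytime-style reply such as
// "59000 20-05-31 12:34:56 50 0 0 123.4 UTC(NIST) *" into a Date (UTC).
// Returns null if the reply does not contain a date and time.
function parseDaytime(reply) {
  var match = /(\d{2})-(\d{2})-(\d{2}) (\d{2}):(\d{2}):(\d{2})/.exec(reply);
  if (!match) return null;
  var year = 2000 + Number(match[1]); // assumption: years 2000-2099
  return new Date(Date.UTC(
    year,
    Number(match[2]) - 1, // JavaScript months are zero-based
    Number(match[3]),
    Number(match[4]),
    Number(match[5]),
    Number(match[6])
  ));
}

var parsed = parseDaytime("59000 20-05-31 12:34:56 50 0 0 123.4 UTC(NIST) *");
console.log(parsed.toISOString());
```

Because the reply is in UTC, a script that sets the local clock would still need to apply the machine’s time zone offset.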

Here’s what the script does with just the code above implemented so far.

Now that the script is working and will sync my PC every time it’s launched, it’s time to have it determine what to automatically launch depending on the time of day. In Windows Scripting Host, this task is as easy as an If-Then statement checking the hour of the day in the “Now” function, and then launching the appropriate software.

When launched between 8 and 10 in the morning, this script will start up my Thunderbird email client. When run between 11am and 1pm, it’ll launch CNN.com in a browser. As you can see, just by being creative and adding a little bit of intelligence to a script file, you can do some pretty cool computer automation.
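The decision itself is just a pair of range checks on the current hour. Here is a minimal JavaScript sketch of that logic. The `chooseLaunch` helper, the placeholder target names and the choice to treat each window as including its start hour but not its end hour are all assumptions made for the example.

```javascript
// Decide what to launch for a given hour of the day (0-23).
// Returns null when nothing should be started.
function chooseLaunch(hour) {
  if (hour >= 8 && hour < 10) return "thunderbird";         // placeholder for the mail client
  if (hour >= 11 && hour < 13) return "http://www.cnn.com"; // page to open in the browser
  return null;
}

console.log(chooseLaunch(new Date().getHours()));
```

Keeping the decision in its own function makes it easy to add more time windows later without touching the code that actually starts the programs.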

By the way, it’s a very good idea to have a reference of scripting commands handy when you write these scripts. If you’re into VBScript like me, a great resource is ss64.com, which lists all VBScript commands alphabetically on one page.

Writing scripts alone isn’t going to automate anything, because you’ll still have to launch them manually. So to complete your automation using the Windows Script Host, go into the Task Scheduler in the Control Panel (under Administrative Tools) and choose to create a task.

The scheduler lets you launch your script upon a whole assortment of events, such as time of day or on a specific schedule, when a system event takes place, or when the computer is first booted or logged into. Here, I’m creating a scheduled task to launch my script above every time the PC starts.

This is only a very brief Windows Scripting Host tutorial. Considering the number of commands and functions available in any of these scripting languages, the possibilities to automate all sorts of cool tasks on your PC are pretty much only limited by your imagination.

Some of the best sites to find pre-written scripts that you can use or customize include the following:

• Microsoft Script Center – Straight from Microsoft, and includes categories like Office, desktop, databases and Active Directory

• Computer Performance – This UK site offers the best selection of VBScripts that I’ve seen online.

• Computer Education – You’ll find a small collection of scripts here, but they’re very useful and they all work.

• Lab Mice – An awesome collection of batch programming resources like an assortment of logon scripts.

Have you ever used the Windows Script Host? Do you have any cool tips or examples to share? Offer your insight and share your experiences in the comments section below.

(By) Ryan, an automation engineer on the East Coast (U.S.) who enjoys discussing the latest trends of online writing and freelancing. Visit his blog at FreeWritingCenter.com to read up on the latest online writing trends and freelance money-making opportunities.

If you’ve been working in the computer world for a while then you’re probably pretty familiar with batch jobs. IT professionals around the world utilized them to run all sorts of automated computer processing jobs and personal tasks. In fact Paul recently covered how to write such a file.

The problem with batch jobs is that they were very limited. The command set was somewhat short and didn’t allow for very much functionality when it came to structured logic using if-then, for, next and while loops.

Later, Windows Scripting Host came along. The MS Windows Scripting Host is a multi-language script utility that Microsoft started installing as standard on all PCs from Windows 98 onward. By the second generation of the tool, it was renamed to Microsoft Script Host (MSH).

A Microsoft Scripting Host Tutorial

Here at MUO, we love computer automation. For example, Varun covered Sikuli (a tool to write automation scripts) and Guy showed you how to use AutoIt to automate tasks. The cool thing about MSH is that if you have any post-Win 98 PC, you can write a “batch” script in a variety of languages.

Available languages include JScript, VBA, and VBscript. It’s also possible to write scripts in Perl, Python, PHP, Ruby or even Basic if you have the right implementation with the right scripting engine.

Personally, I know Visual Basic well, so I usually opt for VBScript. The beauty here is that you don’t need any special programming software or compiler. Just open up Notepad and write your script, just like how you wrote your batch jobs.

Without installing anything, you can write scripts in VBScript. The simplest script prints text to a pop-up window, like this:

Save the file as a .vbs and Windows will recognize and run it. This is what happens when you double click on the file above:

You can write more advanced scripts using the languages you’re accustomed to. For the most flexibility, place <job> and <script language="VBScript"> (or whatever language you choose) tags around each segment of code in your file, and save it as a .wsf file. This way, so long as you enclose the code in the defined script language tags, you can use multiple languages in the same file.

To show you how cool this can be, I decided to write a script that would reach out to the NIST atomic clock to check the current time. If it’s morning, it automatically opens my Thunderbird email client. If it’s around noon, it opens my browser to CNN.com. This kind of conditional script makes your computer much more intelligent: if you run it when your PC starts up, it can automatically launch whatever you like depending on the time of day.

The first part of the script goes out to the time server “http://time.nist.gov:13” and gets the current time. After formatting it correctly, it sets the computer time. Credit where credit is due, this script was adapted from TomRiddle’s excellent script over at VisualBasicScript.com. To save time, always find the example code you need online, and then tweak it to your needs.

Here’s what the script does with just the code above implemented so far.

Now that the script is working and will sync my PC every time it’s launched, it’s time to have it determine what to automatically launch depending on the time of day. In Windows Scripting Host, this task is as easy as an If-Then statement checking the hour of the day in the “Now” function, and then launching the appropriate software.

When launched between 8 and 10 in the morning, this script will start up my Thunderbird email client. When run between 11am and 1pm, it’ll launch CNN.com in a browser. As you can see, just by being creative and adding a little bit of intelligence to a script file, you can do some pretty cool computer automation.
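The time-of-day check described above can be sketched roughly as follows. This is only an illustration in JavaScript (the same shape would work in JScript or VBScript under the script host), not the script from the screenshots. The function name chooseAction and the action labels are made up for the example, and I'm assuming the hour ranges are half-open: hours 8 and 9 count as "morning", hours 11 and 12 as "around noon".

```javascript
// Hypothetical helper: map an hour of the day (0-23) to the action to take.
// Assumption: ranges are half-open, so 8:00-9:59 means email
// and 11:00-12:59 means news. Adjust to taste.
function chooseAction(hour) {
  if (hour >= 8 && hour < 10) {
    return "thunderbird"; // morning: open the email client
  }
  if (hour >= 11 && hour < 13) {
    return "cnn"; // around noon: open the news site
  }
  return "none"; // any other time: launch nothing
}

// Decide based on the current local time.
const action = chooseAction(new Date().getHours());
```

Keeping the decision in a small function like this makes it easy to add more time slots later without touching the code that actually launches programs.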

By the way, it’s a very good idea to have a reference of scripting commands handy when you write these scripts. If you’re into VBScript like me, a great resource is ss64.com, which lists all VBScript commands alphabetically on one page.

Writing scripts alone isn’t going to automate anything, because you’ll still have to manually launch them. So to complete your automation using the Windows Script Host, go into the Task Scheduler in the control panel (administrator area) and select to create a task.

The scheduler lets you launch your script upon a whole assortment of events, such as time of day or on a specific schedule, when a system event takes place, or when the computer is first booted or logged into. Here, I’m creating a scheduled task to launch my script above every time the PC starts.

This is only a very brief Windows Scripting Host tutorial. Considering the number of commands and functions available in any of these scripting languages, the possibilities to automate all sorts of cool tasks on your PC are pretty much only limited by your imagination.

Some of the best sites to find pre-written scripts that you can use or customize include the following:

• Microsoft Script Center – Straight from Microsoft, and includes categories like Office, desktop, databases and Active Directory.

• Computer Performance – This UK site offers the best selection of VBScripts that I’ve seen online.

• Computer Education – You’ll find a small collection of scripts here, but they’re very useful and they all work.

• Lab Mice – An awesome collection of batch programming resources like an assortment of logon scripts.

18 Fun Interesting Facts You Never Knew About The Internet

by James Bruce on Dec. 31st, 2010

Well, it’s official. Pretty much everyone now has broadband and the majority of us use the Internet more than we watch TV. Everyone and their grandmother is on Facebook, and many of us have some kind of embarrassing moment enshrined on YouTube. But how much do you really know about the Internet revolution?

Let’s take a look at how it all began, with this list of fun facts about the Internet that you probably didn’t know already.

1. The technology behind the Internet began back in the 1960s at MIT. The first message ever to be transmitted was LO. Why? The user had attempted to type LOGIN, but the network crashed under the enormous load of data of the letter G. It was to be a while before Facebook would be developed…

2. The World Wide Web began as a single page at the URL http://info.cern.ch/hypertext/WWW/TheProject.html, which contained information about this new-fangled “WorldWideWeb” project, and how you too could make a hypertext page full of wonderful hyperlinks. Sadly, the original page was never saved, but you can view it after 2 years of revisions here.

3. The first emoticon is commonly credited to Kevin Mackenzie in 1979, but was a rather simple -) and didn’t really look like a face. Three years later, :-) was proposed by Scott Fahlman and has become the norm.

4. Did you know – the Japanese also use emoticons, but theirs are the correct way up instead of on the side, and a lot cuter! ~~~ヾ(^∇^)

5. The first webcam was deployed at Cambridge University computer lab – its sole purpose to monitor a particular coffee maker and hence avoid wasted trips to an empty pot.

6. Although the MP3 standard was invented in 1991, it wouldn’t be until 1999 that the first music file-sharing service, Napster, would go live and change the way the Internet was used forever.

7. Ever since the birth of the Internet, file sharing was a problem for the authorities that managed it. In 1989, McGill University shut down their FTP indexing site after finding out that it was responsible for half of the Internet traffic from America into Canada. Fortunately, a number of similar file indexing sites had already been made.

8. Sound familiar? Even today file sharing dominates Internet traffic with torrent files accounting for over 50% of upstream bandwidth. However, a larger proportion of download bandwidth is taken up by streaming media services such as Netflix.

9. Google estimates that the Internet today contains about 5 million terabytes of data (1TB = 1,000GB), and claims it has only indexed a paltry 0.04% of it all! You could fit the whole Internet on just 200 million Blu-Ray disks.

10. Speaking of search – one THIRD of all Internet searches are specifically for pornography. It is estimated that 80% of all images on the Internet are of naked women.

11. According to legend, Amazon became the number one shopping site because in the days before the invention of the search giant Google, Yahoo would list the sites in their directory alphabetically!

12. The first ever banner ad invaded the Internet in 1994, and it was just as bad as today. The ad was part of AT&T’s “You Will” campaign, and was placed on the HotWired homepage.

13. Of the 247 BILLION email messages sent every day, 81% are pure spam.

14. The very first spam email was sent in 1978, when DEC released a new computer and operating system, and an innovative DEC marketeer decided to send a mass email to 600 users and administrators of the ARPANET (the precursor of the Internet). The poor sap who had typed it all in didn’t quite understand the system, and ended up typing the addresses first into the SUBJECT:, which then overflowed into the TO: field, the CC: field, and finally the email body too! The reaction of the recipients was much the same fury as users today. It wasn’t until later though that the term “spam” would be born.

15. So where does the word spam come from? One urban legend traces it back to the Multi User Dungeons of the 1980s – primitive multiplayer adventure games where players explored and performed actions using text only. One new user felt the MUD community and experience was particularly boring, and programmed a keyboard macro to type the words SPAM SPAM SPAM SPAM SPAM repeatedly every few seconds, presumably imitating the famous Monty Python sketch about spam-loving Vikings.

16. Twenty hours of video from around the world are uploaded to YouTube every minute. The first ever YouTube video was uploaded on April 23rd 2005, by Jawed Karim (one of the founders of the site) and was 18 seconds long, entitled “Me at the zoo”. It was quite boring, as is 99% of the content on YouTube today.

17. Internet terrorism is very much a real threat. In February 2008, 5 deep-sea cables that provided Internet connectivity to the Middle East were cut. Curiously, US-occupied Iraq and Israel were unaffected.

18. The most common form of “cyber terrorism” is a DDoS, or Distributed Denial of Service attack, whereby hundreds if not thousands of systems around the world simultaneously and repeatedly connect to a website or network in order to tie up the server resources, often sending it crashing offline. Anonymous released a tool this year that users could download and set on autopilot to receive attack commands from a remote command source. Similar DDoS attacks are often performed by the use of malware installed on users’ computers without their knowledge.

Do you know of any other fun and interesting facts about the Internet? If so, let us know about them in the comments!

Happy New Year!

(By) James is a web developer and SEO consultant who currently lives in the quaint little English town of Surbiton with his Chinese wife. He speaks fluent Japanese and PHP, and when he isn't burning the midnight oil on MakeUseOf articles, he's burning it on iPad and iPhone board game reviews or random tech tutorials instead.

7/1/11

5 Things You Might Not Know about jQuery

Submitted by davidflanagan Posted Jan 05 2011 09:31 PM

jQuery is a very powerful library, but some of its powerful features are obscure, and unless you've read the jQuery source code, or my new book jQuery Pocket Reference, you may not know about them. This article excerpts that book to describe five useful features of jQuery that you might not know about.

1. You don't have to use $(document).ready() anymore, though you'll still see plenty of code out there that does. If you want to run a function when the document is ready to be manipulated, just pass that function directly to $().

2. attr() is jQuery’s master attribute-setting function, and you can use it to set things other than normal HTML attributes. If you use the attr() method to set an attribute named “css”, “val”, “html”, “text”, “data”, “width”, “height”, or “offset”, jQuery invokes the method that has the same name as that attribute and passes whatever value you specified as the argument. The following two lines have the same effect, for example:

$('pre').attr("css", {backgroundColor:"gray"})

$('pre').css({backgroundColor:"gray"})

You probably already know that you can pass a tagname to $() to create an element of that type, and that you can pass (as a second argument) an object of attributes to be set on the newly created element. This second argument can be any object that you would pass to the attr() method, but in addition, if any of the properties have the same name as the event registration methods, the property value is taken as a handler function and is registered as a handler for the named event type. The following code, for example, creates a new element, sets three HTML attributes, and registers an event handler function on it:

var image = $("<img>", {
    src: image_url,
    alt: image_description,
    className: "translucent_image",
    click: function() { $(this).css("opacity", 0.5); }
});

3. jQuery defines simple methods like click() and change() for registering event handlers and also defines a more general event handler registration method named bind(). An important feature of bind() is that it allows you to specify a namespace (or namespaces) for your event handlers when you register them. This allows you to define groups of handlers, which comes in handy if you later want to trigger or de-register all the handlers in a particular group. Handler namespaces are especially useful for programmers who are writing libraries or modules of reusable jQuery code. Event namespaces look like CSS class selectors. To bind an event handler in a namespace, add a period and the namespace name to the event type string:

// Bind f as a mouseover handler in namespace "myMod"
$('a').bind('mouseover.myMod', f);

If your module registered all event handlers using a namespace, you can easily use unbind() to de-register them all:

// Unbind all mouseover and mouseout handlers
// in the "myMod" namespace
$('a').unbind("mouseover.myMod mouseout.myMod");

// Unbind handlers for any event in the myMod namespace
$('a').unbind(".myMod");
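To make the naming scheme concrete, here is a small stand-alone sketch of how "type.namespace" strings can be split and matched. It is not jQuery's implementation, just an illustration of the idea: createRegistry and parseEventName are hypothetical names, and the sketch handles only a single namespace per event name.

```javascript
// Illustrative only: a tiny handler registry that mimics the
// "type.namespace" naming idea. Not how jQuery does it internally.
function parseEventName(name) {
  const dot = name.indexOf(".");
  if (dot === -1) return { type: name, namespace: "" };
  // ".myMod" yields an empty type, meaning "any event type"
  return { type: name.slice(0, dot), namespace: name.slice(dot + 1) };
}

function createRegistry() {
  let handlers = [];
  const split = (names) => names.split(/\s+/).filter(Boolean);
  return {
    // Register fn for each space-separated event name
    bind(names, fn) {
      split(names).forEach((name) => {
        handlers.push({ ...parseEventName(name), fn });
      });
    },
    // Remove handlers matching each name; empty parts act as wildcards
    unbind(names) {
      split(names).forEach((name) => {
        const { type, namespace } = parseEventName(name);
        handlers = handlers.filter((h) => {
          const typeMatches = type === "" || h.type === type;
          const nsMatches = namespace === "" || h.namespace === namespace;
          return !(typeMatches && nsMatches);
        });
      });
    },
    // Run every handler registered for the given event type
    trigger(type) {
      handlers.filter((h) => h.type === type).forEach((h) => h.fn());
    },
    count() {
      return handlers.length;
    }
  };
}
```

With this registry, unbinding ".myMod" removes every handler registered in that namespace and leaves handlers without a namespace alone, which is the behavior the unbind() calls above rely on.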

4. jQuery defines simple animation functions like fadeIn(), and also defines a general-purpose animation method named animate(). The queue property of the options object you pass to animate() specifies whether the animation should be placed on a queue and deferred until pending and previously queued animations have completed. By default, animations are queued, but you can disable this by setting the queue property to false. Unqueued animations start immediately. Subsequent queued animations are not deferred for unqueued animations. Consider the following code:

$("img").fadeIn(500)
    .animate({"width":"+=100"},
             {queue:false, duration:1000})
    .fadeOut(500);

The fadeIn() and fadeOut() effects are queued, but the call to animate() (which animates the width property for 1000ms) is not queued. The width animation begins at the same time the fadeIn() effect begins. The fadeOut() effect begins as soon as the fadeIn() effect ends—it does not wait for the width animation to complete.

5. jQuery fires events of type “ajaxStart” and “ajaxStop” to indicate the start and stop of Ajax-related network activity. When jQuery is not performing any Ajax requests and a new request is initiated, it fires an “ajaxStart” event. If other requests begin before this first one ends, those new requests do not cause a new “ajaxStart” event. The “ajaxStop” event is triggered when the last pending Ajax request is completed and jQuery is no longer performing any network activity. This pair of events can be useful to show and hide a “Loading...” animation or network activity icon. For example:

$("#loading_animation").bind({
    ajaxStart: function() { $(this).show(); },
    ajaxStop: function() { $(this).hide(); }
});

These “ajaxStart” and “ajaxStop” event handlers can be bound to any document element: jQuery triggers them globally rather than on any one particular element.
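The start/stop rule described above amounts to counting pending requests. The following is a rough sketch of that bookkeeping in plain JavaScript, not jQuery's own code. The name createActivityTracker and its callbacks are assumptions made for the example.

```javascript
// Illustrative counter: call onStart when activity goes from idle to busy,
// and onStop when the last pending request finishes.
function createActivityTracker(onStart, onStop) {
  let active = 0; // number of requests currently in flight
  return {
    requestStarted() {
      if (active === 0) onStart(); // only the first request signals "start"
      active += 1;
    },
    requestFinished() {
      if (active === 0) return; // ignore unmatched calls
      active -= 1;
      if (active === 0) onStop(); // only the last request signals "stop"
    },
    pending() {
      return active;
    }
  };
}
```

Two overlapping requests therefore produce one "start" and one "stop", so a loading indicator driven this way does not flicker between them.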

Description

jQuery is the "write less, do more" JavaScript library. Its powerful features and ease of use have made it the most popular client-side JavaScript framework for the Web. This book is jQuery's trusty companion: the definitive "read less, learn more" guide to the library. jQuery Pocket Reference explains everything you need to know about jQuery, completely and comprehensively.

Gratuitous Hadoop: Stress Testing on the Cheap with Hadoop Streaming and EC2

January 6th, 2011 by Boris Shimanovsky

Things have a funny way of working out this way. A couple features were pushed back from a previous release and some last minute improvements were thrown in, and suddenly we found ourselves dragging out a lot more fresh code in our release than usual. All this the night before one of our heavy API users was launching something of their own. They were expecting to hit us thousands of times a second and most of their calls touched some piece of code that hadn’t been tested in the wild. Ordinarily, we would soft launch and put the system through its paces. But now we had no time for that. We really wanted to hammer the entire stack, yesterday, and so we couldn’t rely on internal compute resources.

Typically, people turn to a service for this sort of thing but for the load we wanted, they charge many hundreds of dollars. It was also short notice and after hours, so there was going to be some sort of premium to boot.

At first, I thought we should use something like JMeter from some EC2 machines. However, we didn’t have anything set up for that. What were we going to do with the stats? How would we synchronize starting and stopping? It just seemed like this path was going to take a while.

I wanted to go to bed. Soon.

We routinely run Hadoop jobs on EC2, so everything was already baked to launch a bunch of machines and run jobs. Initially, it seemed like a silly idea, but when the hammer was sitting right there, I saw nails. I Googled it to see if anyone had tried. Of course not. Why would they? And if they did, why admit it? Perhaps nobody else found themselves on the far right side of the Sleep vs Sensitivity-to-Shame scale.

So, it was settled — Hadoop it is! I asked my colleagues to assemble the list of test URLs. Along with some static stuff (no big deal) and a couple dozen basic pages, we had a broad mixture of API requests and AJAX calls we needed to simulate. For the AJAX stuff, we simply grabbed URLs from the Firebug console. Everything else was already in tests or right on the surface, so we’d have our new test set in less than an hour. I figured a few dozen lines of ruby code using Hadoop streaming could probably do what I had in mind.

I’ve read quite a few post-mortems that start off like this, but read on: it turns out all right.

Hadoop Streaming

Hadoop Streaming is a utility that ships with Hadoop and works with pretty much any language. Any executable that reads from stdin and writes to stdout can be a mapper or reducer. By default, input is read line by line: the bytes up to the first tab character are the key, and the remainder of the line is the value. It’s a great resource and bridges the power of Hadoop with the ease of quick scripts. We use it a lot when we need to scale out otherwise simple jobs.
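To make the key/value convention concrete, here is a minimal sketch of a pass-through mapper in ruby. The method names parse_record and identity_mapper are made up for illustration and are not part of Hadoop or of the scripts in this post; the only thing taken from the text above is the split on the first tab.

```ruby
# Split one streaming line into key and value at the first tab.
# A line with no tab is treated as a key with a nil value.
def parse_record(line)
  key, value = line.chomp.split("\t", 2)
  [key, value]
end

# Hypothetical pass-through mapper: reads lines from `input` and
# writes key<TAB>value (or just the key) to `output`.
def identity_mapper(input, output)
  input.each_line do |line|
    key, value = parse_record(line)
    output.puts([key, value].compact.join("\t"))
  end
end

# As a streaming mapper this would be run as:
#   identity_mapper(STDIN, STDOUT)
```

Taking the input and output streams as arguments keeps the logic easy to try locally with a pipe before handing the script to Hadoop.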

Designing Our Job

We had just two basic requirements for our job:

• Visit URLs quickly

• Produce response time stats

Input File

The only input in this project is a list of URLs — only keys and no values. The job would have to run millions of URLs through the process to sustain the desired load for the desired time but we only had hundreds of calls that needed testing. First, we wanted to skew the URL frequency towards the most common calls. To do that, we just put those URLs in multiple times. Then we wanted to shuffle them for better distribution. Finally, we just needed lots of copies.

for i in {1..10000}; do sort -R < sample_urls_list.txt; done > full_urls_list.txt
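The same weighting and shuffling can be sketched in ruby, which makes the skew explicit instead of relying on repeated lines in the sample file. This is an illustration, not what we ran: build_url_list, the weights hash, and the example.com URLs are all hypothetical. One caveat worth checking on your system: GNU sort documents -R as shuffling but grouping identical keys, so repeated URLs may come out adjacent, whereas Array#shuffle treats every copy independently.

```ruby
# Build a weighted, shuffled URL list.
# `weights` maps each URL to how many times it appears per copy
# (hypothetical data); `copies` is how many shuffled copies to emit.
def build_url_list(weights, copies, rng = Random.new)
  one_copy = weights.flat_map { |url, n| [url] * n }
  Array.new(copies) { one_copy.shuffle(random: rng) }.flatten
end

# Example with made-up URLs: the first URL is hit three times as often.
weights = {
  "http://example.com/api/popular" => 3,
  "http://example.com/api/rare"    => 1
}
urls = build_url_list(weights, 5)
# File.write("full_urls_list.txt", urls.join("\n") + "\n")
```

Each copy is shuffled separately, so the mix of calls stays roughly even over the whole run rather than drifting toward one end of the file.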

Mapper

The mapper was going to do most of the work for us. It needed to fetch URLs as quickly as possible and record the elapsed time for each request. Hadoop processes definitely have overhead and even though each EC2 instance could likely be fetching hundreds of URLs at once, it couldn’t possibly run hundreds of mappers. To get past this issue, we had two options: 1) just launch more machines and under-utilize them or 2) fetch lots of URLs concurrently with each mapper. We’re trying not to needlessly waste money, so #1 is out.

I had used the curb gem (libcurl bindings for ruby) on several other projects and it worked really well. It turns out that it was going to be especially helpful here since it has a Multi class which can run concurrent requests each with blocks that function essentially as callbacks. With a little hackery, it could be turned into a poor/lazy/sleep-deprived man’s thread pool.

The main loop:

@curler.perform do

flush_buffer!

STDIN.take(@concurrent_requests-@curler.requests.size).each do |url|

add_url(url.chomp)

end

end

Blocks for success and failure:

curl.on_success do

if errorlike_content?(curl.body_str)

log_error(curl.url,'errorlike content')

else

@response_buffer<<[curl.url,Time.now-start_time]

end

end

curl.on_failure do |curl_obj,args|

error_type = args.first.to_s if args.is_a?(Array)

log_error(curl.url,error_type)

end

As you can see, each completion calls a block that outputs the URL and the number of seconds it took to process the request. A little healthy paranoia about thread safety resulted in the extra steps of buffering and flushing output — this would ensure we don’t interleave output coming from multiple callbacks.

If there is an error, the mapper will just emit the URL without an elapsed time as a hint to the stats aggregator. In addition, it uses the ruby “warn” method to emit a line to stderr. This increments a built-in Hadoop counter mechanism that watches stderr for messages in the following format:

reporter:counter:[group],[counter],[amount]

In this case, the line is:

reporter:counter:error,[errortype],1

This is a handy way to report what’s happening while a job is in progress and is surfaced through the standard Hadoop job web interface.
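A small helper keeps the counter format in one place. This is a sketch under the format shown above; counter_line and increment_counter are hypothetical names, and the sketch assumes group and counter names contain no commas, since a comma would change how the line is split.

```ruby
# Format a Hadoop Streaming counter update line.
# Assumes group and counter names contain no commas.
def counter_line(group, counter, amount = 1)
  "reporter:counter:#{group},#{counter},#{amount}"
end

# Write the counter update to stderr, which is where Hadoop Streaming
# watches for reporter lines. `err` is injectable for local testing.
def increment_counter(group, counter, amount = 1, err = $stderr)
  err.puts counter_line(group, counter, amount)
end

# Example: increment_counter("error", "timeout")
```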

Mapper-Only Implementation

The project could actually be done here if all we wanted was raw data to analyze via some stats software or a database. One could simply cat together the part files from HDFS and start crunching.

In this case, the whole job would look like this:

hadoop jar $HADOOP_HOME/hadoop-streaming.jar \

-D mapred.map.tasks=100 \

-D mapred.reduce.tasks=0 \

-D mapred.job.name="Stress Test - Mapper Only" \

-D mapred.speculative.execution=false \

-input "/mnt/hadoop/urls.txt" \

-output "/mnt/hadoop/stress_output" \

-mapper "$MY_APP_PATH/samples/stress/get_urls.rb 100"

and then when it finishes:

hadoop dfs -cat /mnt/hadoop/stress_output/part* > my_combined_data.txt

Reducer

In our case, I wanted to use the reducer to compute the stats as part of the job. In Hadoop streaming, a reducer can expect to receive lines through stdin sorted by key, and can expect that the same key will not find its way to multiple reducers. This eliminates the requirement that the reducer code track state for more than one key at a time: it can simply do whatever it does to values associated with a key (e.g. aggregate) and move on when a new key arrives. This is a good time to mention the aggregate package, which could be used as the reducer to accumulate stats. In our case, I wanted to control my mapper output as well as retain the flexibility to skip the reducer altogether and just get raw data.
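Because the lines arrive sorted by key, grouping them only requires watching for the key to change. Here is a minimal sketch of that idea using Enumerable#chunk; each_key_group is a hypothetical helper, not the reducer we actually ran, and it assumes its input is already sorted by key. Note that chunk drops items whose block returns nil, so completely empty lines are skipped.

```ruby
# Yield each key together with all of its values, assuming the
# lines are already sorted by key (as Hadoop delivers them to a reducer).
# A line with no tab yields a nil value for that key.
def each_key_group(lines)
  lines.map { |l| l.chomp.split("\t", 2) }
       .chunk { |key, _| key }
       .each { |key, pairs| yield key, pairs.map { |_, v| v } }
end

# Example: print how many values each key received.
sample = ["a\t0.12\n", "a\t0.30\n", "b\n", "c\t0.05\n"]
each_key_group(sample) { |key, values| puts "#{key}\t#{values.size}" }
```

This version loads all lines into memory first, so it is only suitable for trying the logic on small samples; a real reducer would process stdin one line at a time.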

The streaming job command looks like this:

hadoop jar $HADOOP_HOME/hadoop-streaming.jar \

-D mapred.map.tasks=100 \

-D mapred.reduce.tasks=8 \

-D mapred.job.name="Stress Test - Full" \

-D mapred.speculative.execution=false \

-input "/mnt/hadoop/urls.txt" \

-output "/mnt/hadoop/stress_output" \

-mapper "$MY_APP_PATH/samples/stress/get_urls.rb 100" \

-reducer "$MY_APP_PATH/samples/stress/stats_aggregator.rb --reducer"

For each key (URL) and value (elapsed time), the following variables get updated:

• sum – total time elapsed for all requests

• min – fastest response

• max – slowest response

• count – number of requests processed

key,val = l.chomp.split("\t",2)

if last_key.nil? || last_key!=key

write_stats(curr_rec) unless last_key.nil?

curr_rec = STATS_TEMPLATE.dup.update(:key=>key)

last_key=key

end

if val && val!=''

val=val.to_f

curr_rec[:sum]+=val

curr_rec[:count]+=1

curr_rec[:min]=val if curr_rec[:min].nil? || val<curr_rec[:min]

curr_rec[:max]=val if curr_rec[:max].nil? || val>curr_rec[:max]

else

curr_rec[:errors]+=1

end

Finally, as we flush, we compute the overall average for the key.

def write_stats(stats_hash)

stats_hash[:average]=stats_hash[:sum]/stats_hash[:count] if stats_hash[:count]>0

puts stats_hash.values_at(*STATS_TEMPLATE.keys).join("\t")

end

Final Stats (optional)

When the job completes, it produces as many part files as there are total reducers. Before this data can be loaded into, say, a spreadsheet, it needs to be merged and converted into a friendly format. A few more lines of code get us a csv file that can easily be dropped into your favorite spreadsheet/charting software:

hadoop dfs -cat /mnt/hadoop/stress_output/part* | $MY_APP_PATH/samples/stress/stats_aggregator.rb --csv > final_stats.csv

Our CSV converter looks like this:

class CSVConverter

def print_stats

puts STATS_TEMPLATE.keys.to_csv

STDIN.each_line do |l|

puts l.chomp.split("\t").to_csv

end

end

end

Source Code

The mapper, get_urls.rb:

#!/usr/bin/env ruby1.9

require 'rubygems'

require 'curb'

class MultiCurler

DEFAULT_CONCURRENT_REQUESTS = 100

def initialize(opts={})

@concurrent_requests = opts[:concurrent_requests] || DEFAULT_CONCURRENT_REQUESTS

@curler = Curl::Multi.new

@response_buffer=[]

end

def start

while !STDIN.eof?

STDIN.take(@concurrent_requests).each do |url|

add_url(url.chomp)

end

run

end

end

private

def run

@curler.perform do

flush_buffer!

STDIN.take(@concurrent_requests-@curler.requests.size).each do |url|

add_url(url.chomp)

end

end

flush_buffer!

end

def flush_buffer!

while output = @response_buffer.pop

puts output.join("\t")

end

end

def add_url(u)

#skip really obvious input errors

return log_error(u,'missing url') if u.nil?

return log_error(u,'invalid url') unless u=~/^http:\/\//i

c = Curl::Easy.new(u) do|curl|

start_time = Time.now

curl.follow_location = true

curl.enable_cookies=true

curl.on_success do

if errorlike_content?(curl.body_str)

log_error(curl.url,'errorlike content')

else

@response_buffer<<[curl.url,Time.now-start_time]

end

end

curl.on_failure do |curl_obj,args|

error_type = args.first.to_s if args.is_a?(Array)

log_error(curl.url,error_type)

end

end

@curler.add(c)

end

def errorlike_content?(page_body)

page_body.nil? || page_body=='' || page_body=~/(unexpected error|something went wrong|Api::Error)/i

end

def log_error(url,error_type)

@response_buffer<<[url,nil]

warn "reporter:counter:error,#{error_type||'unknown'},1"

end

end

concurrent_requests = ARGV.first ? ARGV.first.to_i : nil

runner=MultiCurler.new(:concurrent_requests=>concurrent_requests)

runner.start

The reducer and postprocessing script, stats_aggregator.rb:

#!/usr/bin/env ruby1.9

require 'rubygems'

require 'csv'

module Stats

STATS_TEMPLATE={:key=>nil,:sum=>0,:average=>nil,:max=>nil,:min=>nil,:errors=>0,:count=>0}

class Reducer

def run

last_key=nil

curr_rec=nil

STDIN.each_line do |l|

key,val = l.chomp.split("\t",2)

if last_key.nil? || last_key!=key

write_stats(curr_rec) unless last_key.nil?

curr_rec = STATS_TEMPLATE.dup.update(:key=>key)

last_key=key

end

if val && val!=''

val=val.to_f

curr_rec[:sum]+=val

curr_rec[:count]+=1

curr_rec[:min]=val if curr_rec[:min].nil? || val<curr_rec[:min]

curr_rec[:max]=val if curr_rec[:max].nil? || val>curr_rec[:max]

else

curr_rec[:errors]+=1

end

end

write_stats(curr_rec) if curr_rec

end

private

def write_stats(stats_hash)

stats_hash[:average]=stats_hash[:sum]/stats_hash[:count] if stats_hash[:count]>0

puts stats_hash.values_at(*STATS_TEMPLATE.keys).join("\t")

end

end

class CSVConverter

def print_stats

puts STATS_TEMPLATE.keys.to_csv

STDIN.each_line do |l|

puts l.chomp.split("\t").to_csv

end

end

end

end

mode = ARGV.shift

case mode

when '--reducer' #hadoop mode

Stats::Reducer.new.run

when '--csv' #csv converter; run with: hadoop dfs -cat /mnt/hadoop/stress_output/part* | stats_aggregator.rb --csv

Stats::CSVConverter.new.print_stats

else

abort 'Invalid mode specified for stats aggregator. Valid options are --reducer, --csv'

end

Reckoning (Shame Computation)

In a moment of desperation, we used Hadoop to solve a problem for which it is very tenuously appropriate, but it actually turned out great. I wrote very little code and it worked with almost no iteration and just a couple of up-front hours invested for an easily repeatable process. Our last minute stress test exposed a few issues that we were able to quickly correct and resulted in a smooth launch. All this, and it only cost us about $10 of EC2 time.

Hadoop Streaming is a powerful tool that every Hadoop shop, even the pure Java shops, should consider part of their toolbox. Lots of big data jobs are actually simple except for scale, so your local python, ruby, bash, perl, or whatever coder along with some EC2 dollars can give you access to some pretty powerful stuff.
